Suppose we have data on 60,000 households. Are they useful for analysis? If we add that the amount of data is very large, say 3 TB or even 30 TB, does that change your answer?

The U.S. government collects monthly data from 60,000 randomly selected households and reports on the national employment situation. Based on these data, the U.S. unemployment rate is estimated to within a margin of sampling error of about 0.2 percentage points. Important inferences are drawn and policies are made from these statistics about a U.S. economy comprising 120 million households and 310 million individuals.

In this case, data for 60,000 households are very useful.
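To see why so small a sample can be this precise, here is a rough back-of-the-envelope sketch. It assumes simple random sampling, an illustrative unemployment rate of 5%, and a 90% confidence level. None of these assumptions comes from the text, and the actual survey uses a more complex design and measures persons in the labor force rather than households, so the result only indicates the order of magnitude.

```python
import math

def srs_margin_of_error(p, n, z):
    """Margin of error for a proportion p estimated from a simple random sample of size n."""
    return z * math.sqrt(p * (1.0 - p) / n)

sample_size = 60_000            # households in the sample (from the text)
population_size = 120_000_000   # households in the U.S. (from the text)
assumed_rate = 0.05             # ASSUMPTION: illustrative unemployment rate
z_90 = 1.645                    # ASSUMPTION: normal critical value for 90% confidence

sampling_fraction = sample_size / population_size
moe = srs_margin_of_error(assumed_rate, sample_size, z_90)

print(f"sampling fraction: {sampling_fraction:.4%}")
print(f"margin of error:   {moe * 100:.2f} percentage points")
```

Under these assumptions the margin of error is roughly 0.15 percentage points, the same order as the figure quoted above. The formula depends on the sample size, not on the size of the population, which is why a sampling fraction of 0.05% is not an obstacle when selection is random.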

These 60,000 households represent only 0.05% of all the households in the U.S. If they were not randomly selected, the statistics they generate would contain unknown and potentially large bias, and they could not be relied on to describe the national employment situation.

In this case, data for 60,000 households are not useful at all, regardless of what the file size may be.
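The contrast between the two cases can be illustrated with a small simulation. This is only a sketch under made-up assumptions: a hypothetical population of 1.2 million households (scaled down for speed), a true unemployment rate of 5%, and a non-random sample in which unemployed households are half as likely to be reached. The function and parameter names are invented for this illustration.

```python
import random

def compare_samples(seed=0, population_size=1_200_000, true_rate=0.05,
                    sample_size=60_000, reach_prob_unemployed=0.5):
    """Return (random-sample estimate, non-random-sample estimate) of the rate."""
    rng = random.Random(seed)

    # Hypothetical population: 1 = unemployed household, 0 = otherwise.
    n_unemployed = round(population_size * true_rate)
    population = [1] * n_unemployed + [0] * (population_size - n_unemployed)
    rng.shuffle(population)

    # Case 1: every household has the same chance of selection.
    random_sample = rng.sample(population, sample_size)

    # Case 2 (ASSUMPTION): unemployed households are reached only half the time.
    biased_sample = []
    for status in population:
        if status == 1 and rng.random() > reach_prob_unemployed:
            continue  # this unemployed household was never reached
        biased_sample.append(status)
        if len(biased_sample) == sample_size:
            break

    random_estimate = sum(random_sample) / len(random_sample)
    biased_estimate = sum(biased_sample) / len(biased_sample)
    return random_estimate, biased_estimate

random_estimate, biased_estimate = compare_samples()
print(f"true rate:            {0.05:.2%}")
print(f"random sample:        {random_estimate:.2%}")
print(f"non-random sample:    {biased_estimate:.2%}")
```

Both samples contain 60,000 households, yet only the random one lands near the true rate. The non-random estimate settles near half the true rate, and collecting more records under the same selection mechanism would not move it closer.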

Suppose further that the 60,000 households are all located in a small city that has only 60,000 households. In other words, they represent the entire universe of households in the city. These data are potentially very useful. Depending on their content and relevance to the question of interest, the usefulness of the data may again range widely between two extremes. If the content is relevant and the quality is good, file size may then become an indicator of how useful the data are.

This simple line of reasoning shows that the original question is too incomplete for a direct, satisfactory answer. We must also consider, for example, the sample selection method, how well the sample represents the population under study, and the relevance and quality of the data relative to the specific hypothesis being investigated.

The original question of data usefulness was seldom asked before the Big Data era began around 2000, when electronic data became widely available in massive amounts at relatively low cost. Before then, data were usually collected for a known, specific purpose, such as an exploration to conduct, a hypothesis to test, or a problem to resolve. Because collecting data was costly, data that were collected were already considered potentially useful for the intended analysis.

For example, in the 1930s, when the nation was mired in the Great Depression, the U.S. government began to collect data from randomly selected households so that it could produce more reliable and timely statistics about unemployment. This practice has continued to this day.

Statisticians initially considered data mining to be a bad practice. They argued that, without a prior hypothesis, aimlessly “fishing,” “dredging,” or “snooping” through data inevitably leads to false or misleading identification of “significant” relationships and patterns. An analogy is the overinterpretation of a person winning a lottery: the win does not necessarily mean the person possesses any special skill or knowledge about winning, because random chance dictates that some person(s) must eventually win.
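The statisticians' concern is easy to reproduce. The sketch below screens 1,000 columns of pure random noise against an equally random outcome and counts how many look "significant" at roughly the 5% level. The sample size, the number of columns, and the use of the Fisher z approximation for the significance test are all choices made for this illustration, not something taken from the text.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def count_spurious_hits(n_obs=100, n_predictors=1000, z_crit=1.96, seed=0):
    """Count noise predictors that pass a two-sided test at about the 5% level."""
    rng = random.Random(seed)
    outcome = [rng.gauss(0, 1) for _ in range(n_obs)]
    hits = 0
    for _ in range(n_predictors):
        noise = [rng.gauss(0, 1) for _ in range(n_obs)]  # unrelated to the outcome
        r = pearson_r(noise, outcome)
        # Fisher z approximation: atanh(r) * sqrt(n - 3) is roughly N(0, 1) under no correlation.
        z = math.atanh(r) * math.sqrt(n_obs - 3)
        if abs(z) > z_crit:
            hits += 1
    return hits

hits = count_spurious_hits()
print(f"'significant' noise predictors: {hits} of 1000")
```

Because each test has about a 5% chance of a false alarm, around 50 of the 1,000 noise columns are expected to pass, even though none has any real relationship to the outcome. These are the lottery winners of the analogy.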

Although the argument of false identification remains valid today, it has been overwhelmed by the abundance of available Big Data, which are frequently collected without design or even structure. Total dismissal of the data-driven approach forgoes the chance of uncovering hidden, meaningful relationships that have not been, or cannot be, established as a priori hypotheses. An analogy is the prediction of hereditary disease and the study of potential treatment. After data on the entire human genome are collected, they may be explored and compared for the systematic identification and treatment of specific hereditary diseases.

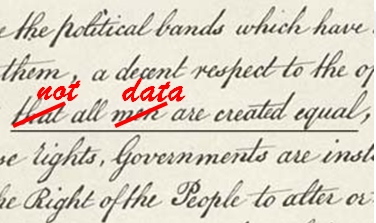

Not all data are created equal, nor are they equally useful.

Complete and structured data can create dynamic frames that describe an entire population in detail over time, providing valuable information that has never been available in previous statistical systems. On the other hand, fragmented and unstructured data may not yield any meaningful analysis no matter how large the file size may be.

As problem solving rapidly expands from a hypothesis-driven paradigm to include a data-driven approach, the fundamental questions about the usefulness and quality of these data have also increased in importance. While the question of study interest may not be specified a priori, it must still be established a posteriori, after the data are collected, before any analysis is conducted. We cannot obtain a correct answer to a question that does not exist.

How are the samples selected? How well does the sample represent the universe of inference? What is the relevance and quality of the data relative to the posterior hypothesis of interest? File size has little to no meaning if the usefulness of the data cannot be established in the first place.

Ignoring these considerations may lead to the need to update a well-known quote: “Lies, Damned Lies, and Big Data.”